ROSE | Robots and the Future of Welfare Services

- Funded by: Academy of Finland

- Partners: Aalto University (PI: Prof. Ville Kyrki), LUT-University, Tampere University of Technology, University of Tampere, VTT, Laurea

- Role: Postdoctoral researcher, involvement in research supervision (from Sep 2017)

- Period: May 2015–Apr 2021

abstract

In post-industrial societies such as Finland, the demand for welfare and health services is growing strongly, and these services account for the majority of public expenditure. Service robots are believed to have great potential in this area: they could increase productivity, enable quality improvements, and create new business through novel services. The application area is nevertheless challenging because ethical, legal, and social issues are central to it.

The ROSE project is a multidisciplinary study of how advances in service robotics enable product and service innovation and the renewal of welfare services when such services are developed ethically and jointly with stakeholders. The development is studied at the individual, institutional, and societal levels, taking into account user needs, ethical issues, technological maturity, and the health care service system.

related publications

journal articles

- RA-L

DDGC: Generative Deep Dexterous Grasping in Clutter
Jens Lundell, Francesco Verdoja, and Ville Kyrki
IEEE Robotics and Automation Letters, Oct 2021

Recent advances in multi-fingered robotic grasping have enabled fast 6-Degrees-Of-Freedom (DOF) single object grasping. Multi-finger grasping in cluttered scenes, on the other hand, remains mostly unexplored due to the added difficulty of reasoning over obstacles which greatly increases the computational time to generate high-quality collision-free grasps. In this work we address such limitations by introducing DDGC, a fast generative multi-finger grasp sampling method that can generate high quality grasps in cluttered scenes from a single RGB-D image. DDGC is built as a network that encodes scene information to produce coarse-to-fine collision-free grasp poses and configurations. We experimentally benchmark DDGC against the simulated-annealing planner in GraspIt! on 1200 simulated cluttered scenes and 7 real world scenes. The results show that DDGC outperforms the baseline on synthesizing high-quality grasps and removing clutter while being 5 times faster. This, in turn, opens up the door for using multi-finger grasps in practical applications which has so far been limited due to the excessive computation time needed by other methods.

@article{202110_lundell_ddgc, title = {{DDGC}: {Generative} {Deep} {Dexterous} {Grasping} in {Clutter}}, volume = {6}, shorttitle = {{DDGC}}, doi = {10.1109/LRA.2021.3096239}, number = {4}, journal = {IEEE Robotics and Automation Letters}, publisher = {IEEE}, author = {Lundell, Jens and Verdoja, Francesco and Kyrki, Ville}, month = oct, year = {2021}, pages = {6899--6906}, }

- RA-L

Probabilistic Surface Friction Estimation Based on Visual and Haptic Measurements
Tran Nguyen Le, Francesco Verdoja, Fares J. Abu-Dakka, and Ville Kyrki
IEEE Robotics and Automation Letters, Apr 2021

Accurately modeling local surface properties of objects is crucial to many robotic applications, from grasping to material recognition. Surface properties like friction are however difficult to estimate, as visual observation of the object does not convey enough information over these properties. In contrast, haptic exploration is time consuming as it only provides information relevant to the explored parts of the object. In this work, we propose a joint visuo-haptic object model that enables the estimation of surface friction coefficient over an entire object by exploiting the correlation of visual and haptic information, together with a limited haptic exploration by a robotic arm. We demonstrate the validity of the proposed method by showing its ability to estimate varying friction coefficients on a range of real multi-material objects. Furthermore, we illustrate how the estimated friction coefficients can improve grasping success rate by guiding a grasp planner toward high friction areas.

@article{202104_nguyen_le_probabilistic, title = {Probabilistic {Surface} {Friction} {Estimation} {Based} on {Visual} and {Haptic} {Measurements}}, volume = {6}, doi = {10.1109/LRA.2021.3062585}, number = {2}, journal = {IEEE Robotics and Automation Letters}, publisher = {IEEE}, author = {Nguyen Le, Tran and Verdoja, Francesco and Abu-Dakka, Fares J. and Kyrki, Ville}, month = apr, year = {2021}, pages = {2838--2845}, }

conference articles

- ECMR

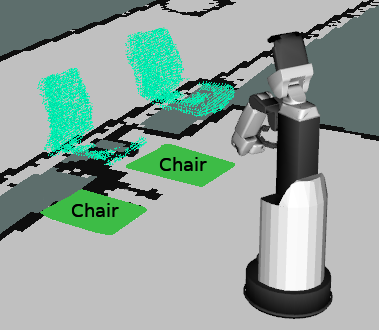

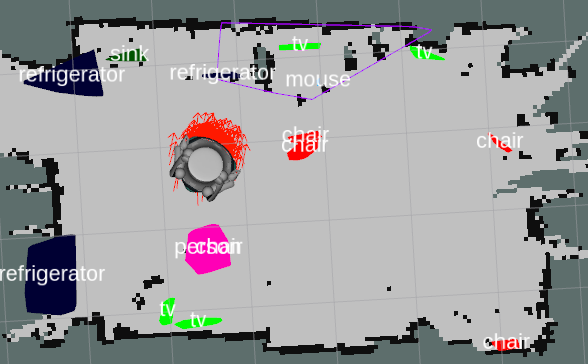

Online Object-Oriented Semantic Mapping and Map Updating
Nils Dengler, Tobias Zaenker, Francesco Verdoja, and Maren Bennewitz
In 2021 Eur. Conf. on Mobile Robots (ECMR), Aug 2021

Creating and maintaining an accurate representation of the environment is an essential capability for every service robot. Especially for household robots acting in indoor environments, semantic information is important. In this paper, we present a semantic mapping framework with modular map representations. Our system is capable of online mapping and object updating given object detections from RGB-D data and provides various 2D and 3D representations of the mapped objects. To undo wrong data associations, we perform a refinement step when updating object shapes. Furthermore, we maintain an existence likelihood for each object to deal with false positive and false negative detections and keep the map updated. Our mapping system is highly efficient and achieves a run time of more than 10 Hz. We evaluated our approach in various environments using two different robots, i.e., a Toyota HSR and a Fraunhofer Care-O-Bot-4. As the experimental results demonstrate, our system is able to generate maps that are close to the ground truth and outperforms an existing approach in terms of intersection over union, different distance metrics, and the number of correct object mappings.
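The abstract above mentions a per-object existence likelihood but does not say how it is updated. Purely as an illustration, one common way to keep such a value is a Bayes update after each observation. The sketch below is not taken from the paper: the function name `update_existence` and the two detector probabilities are hypothetical values chosen for the example.

```python
# Hypothetical detector model (assumed values, not from the paper).
P_DETECT = 0.8       # P(detection | object exists)
P_FALSE_ALARM = 0.1  # P(detection | object does not exist)


def update_existence(prob, detected):
    """Bayes update of an object's existence probability after one observation."""
    if detected:
        like_exists, like_absent = P_DETECT, P_FALSE_ALARM
    else:
        like_exists, like_absent = 1.0 - P_DETECT, 1.0 - P_FALSE_ALARM
    numerator = like_exists * prob
    return numerator / (numerator + like_absent * (1.0 - prob))


# Start undecided, then fold in a short sequence of observations.
p = 0.5
for seen in [True, True, False, True]:
    p = update_existence(p, seen)
print(f"existence probability: {p:.3f}")
```

Under these assumptions, a single missed detection lowers the probability without removing the object outright, and repeated misses would eventually push it below whatever removal threshold a map chooses.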

@inproceedings{202108_dengler_online, title = {Online {Object}-{Oriented} {Semantic} {Mapping} and {Map} {Updating}}, doi = {10.1109/ECMR50962.2021.9568817}, booktitle = {2021 {Eur.} {Conf.} on {Mobile} {Robots} ({ECMR})}, author = {Dengler, Nils and Zaenker, Tobias and Verdoja, Francesco and Bennewitz, Maren}, month = aug, year = {2021}, }

- ICRA

Multi-FinGAN: Generative Coarse-To-Fine Sampling of Multi-Finger Grasps
Jens Lundell, Enric Corona, Tran Nguyen Le, Francesco Verdoja, Philippe Weinzaepfel, and 3 more authors
In 2021 IEEE Int. Conf. on Robotics and Automation (ICRA), May 2021

While there exist many methods for manipulating rigid objects with parallel-jaw grippers, grasping with multi-finger robotic hands remains a quite unexplored research topic. Reasoning and planning collision-free trajectories on the additional degrees of freedom of several fingers represents an important challenge that, so far, involves computationally costly and slow processes. In this work, we present Multi-FinGAN, a fast generative multi-finger grasp sampling method that synthesizes high quality grasps directly from RGB-D images in about a second. We achieve this by training in an end-to-end fashion a coarse-to-fine model composed of a classification network that distinguishes grasp types according to a specific taxonomy and a refinement network that produces refined grasp poses and joint angles. We experimentally validate and benchmark our method against a standard grasp-sampling method on 790 grasps in simulation and 20 grasps on a real Franka Emika Panda. All experimental results using our method show consistent improvements both in terms of grasp quality metrics and grasp success rate. Remarkably, our approach is up to 20-30 times faster than the baseline, a significant improvement that opens the door to feedback-based grasp re-planning and task informative grasping. Code is available at this https URL.

@inproceedings{202105_lundell_multi-fingan, title = {Multi-{FinGAN}: {Generative} {Coarse}-{To}-{Fine} {Sampling} of {Multi}-{Finger} {Grasps}}, shorttitle = {Multi-{FinGAN}}, doi = {10.1109/ICRA48506.2021.9561228}, booktitle = {2021 {IEEE} {Int.} {Conf.} on {Robotics} and {Automation} ({ICRA})}, publisher = {IEEE}, author = {Lundell, Jens and Corona, Enric and Nguyen Le, Tran and Verdoja, Francesco and Weinzaepfel, Philippe and Rogez, Grégory and Moreno-Noguer, Francesc and Kyrki, Ville}, month = may, year = {2021}, pages = {4495--4501}, }

- MFI

Hypermap Mapping Framework and its Application to Autonomous Semantic ExplorationTobias Zaenker, Francesco Verdoja, and Ville KyrkiIn 2020 IEEE Int. Conf. on Multisensor Fusion and Integration for Intelligent Systems (MFI), Sep 2020

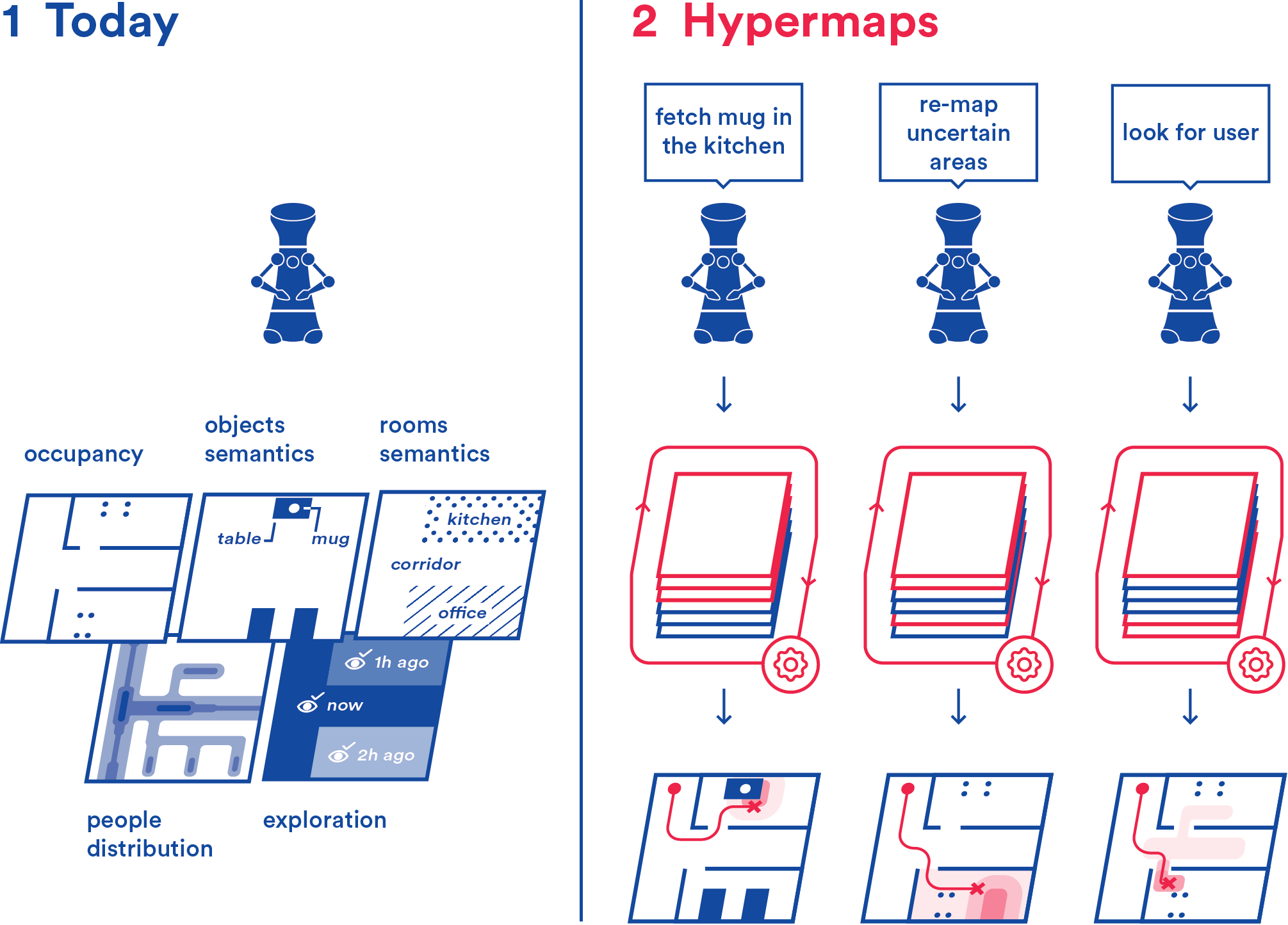

Hypermap Mapping Framework and its Application to Autonomous Semantic ExplorationTobias Zaenker, Francesco Verdoja, and Ville KyrkiIn 2020 IEEE Int. Conf. on Multisensor Fusion and Integration for Intelligent Systems (MFI), Sep 2020Modern intelligent and autonomous robotic applications often require robots to have more information about their environment than that provided by traditional occupancy grid maps. For example, a robot tasked to perform autonomous semantic exploration has to label objects in the environment it is traversing while autonomously navigating. To solve this task the robot needs to at least maintain an occupancy map of the environment for navigation, an exploration map keeping track of which areas have already been visited, and a semantic map where locations and labels of objects in the environment are recorded. As the number of maps required grows, an application has to know and handle different map representations, which can be a burden.We present the Hypermap framework, which can manage multiple maps of different types. In this work, we explore the capabilities of the framework to handle occupancy grid layers and semantic polygonal layers, but the framework can be extended with new layer types in the future. Additionally, we present an algorithm to automatically generate semantic layers from RGB-D images. We demonstrate the utility of the framework using the example of autonomous exploration for semantic mapping.

@inproceedings{202009_zaenker_hypermap, title = {Hypermap {Mapping} {Framework} and its {Application} to {Autonomous} {Semantic} {Exploration}}, doi = {10.1109/MFI49285.2020.9235231}, booktitle = {2020 {IEEE} {Int.} {Conf.} on {Multisensor} {Fusion} and {Integration} for {Intelligent} {Systems} ({MFI})}, publisher = {IEEE}, author = {Zaenker, Tobias and Verdoja, Francesco and Kyrki, Ville}, month = sep, year = {2020}, pages = {133--139}, }

- ICRA

Beyond Top-Grasps Through Scene Completion
Jens Lundell, Francesco Verdoja, and Ville Kyrki
In 2020 IEEE Int. Conf. on Robotics and Automation (ICRA), May 2020

Current end-to-end grasp planning methods propose grasps in the order of seconds that attain high grasp success rates on a diverse set of objects, but often by constraining the workspace to top-grasps. In this work, we present a method that allows end-to-end top-grasp planning methods to generate full six-degree-of-freedom grasps using a single RGBD view as input. This is achieved by estimating the complete shape of the object to be grasped, then simulating different viewpoints of the object, passing the simulated viewpoints to an end-to-end grasp generation method, and finally executing the overall best grasp. The method was experimentally validated on a Franka Emika Panda by comparing 429 grasps generated by the state-of-the-art Fully Convolutional Grasp Quality CNN, both on simulated and real camera images. The results show statistically significant improvements in terms of grasp success rate when using simulated images over real camera images, especially when the real camera viewpoint is angled.

@inproceedings{202005_lundell_beyond, title = {Beyond {Top}-{Grasps} {Through} {Scene} {Completion}}, doi = {10.1109/ICRA40945.2020.9197320}, booktitle = {2020 {IEEE} {Int.} {Conf.} on {Robotics} and {Automation} ({ICRA})}, publisher = {IEEE}, author = {Lundell, Jens and Verdoja, Francesco and Kyrki, Ville}, month = may, year = {2020}, pages = {545--551}, }

- IROS

Robust Grasp Planning Over Uncertain Shape Completions
Jens Lundell, Francesco Verdoja, and Ville Kyrki
In 2019 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), Nov 2019

We present a method for planning robust grasps over uncertain shape completed objects. For shape completion, a deep neural network is trained to take a partial view of the object as input and outputs the completed shape as a voxel grid. The key part of the network is dropout layers which are enabled not only during training but also at run-time to generate a set of shape samples representing the shape uncertainty through Monte Carlo sampling. Given the set of shape completed objects, we generate grasp candidates on the mean object shape but evaluate them based on their joint performance in terms of analytical grasp metrics on all the shape candidates. We experimentally validate and benchmark our method against another state-of-the-art method with a Barrett hand on 90000 grasps in simulation and 200 grasps on a real Franka Emika Panda. All experimental results show statistically significant improvements both in terms of grasp quality metrics and grasp success rate, demonstrating that planning shape-uncertainty-aware grasps brings significant advantages over solely planning on a single shape estimate, especially when dealing with complex or unknown objects.

@inproceedings{201911_lundell_robust, address = {Macau, China}, title = {Robust {Grasp} {Planning} {Over} {Uncertain} {Shape} {Completions}}, doi = {10.1109/IROS40897.2019.8967816}, booktitle = {2019 {IEEE}/{RSJ} {Int.}\ {Conf.}\ on {Intelligent} {Robots} and {Systems} ({IROS})}, publisher = {IEEE}, author = {Lundell, Jens and Verdoja, Francesco and Kyrki, Ville}, month = nov, year = {2019}, pages = {1526--1532}, }

- Humanoids

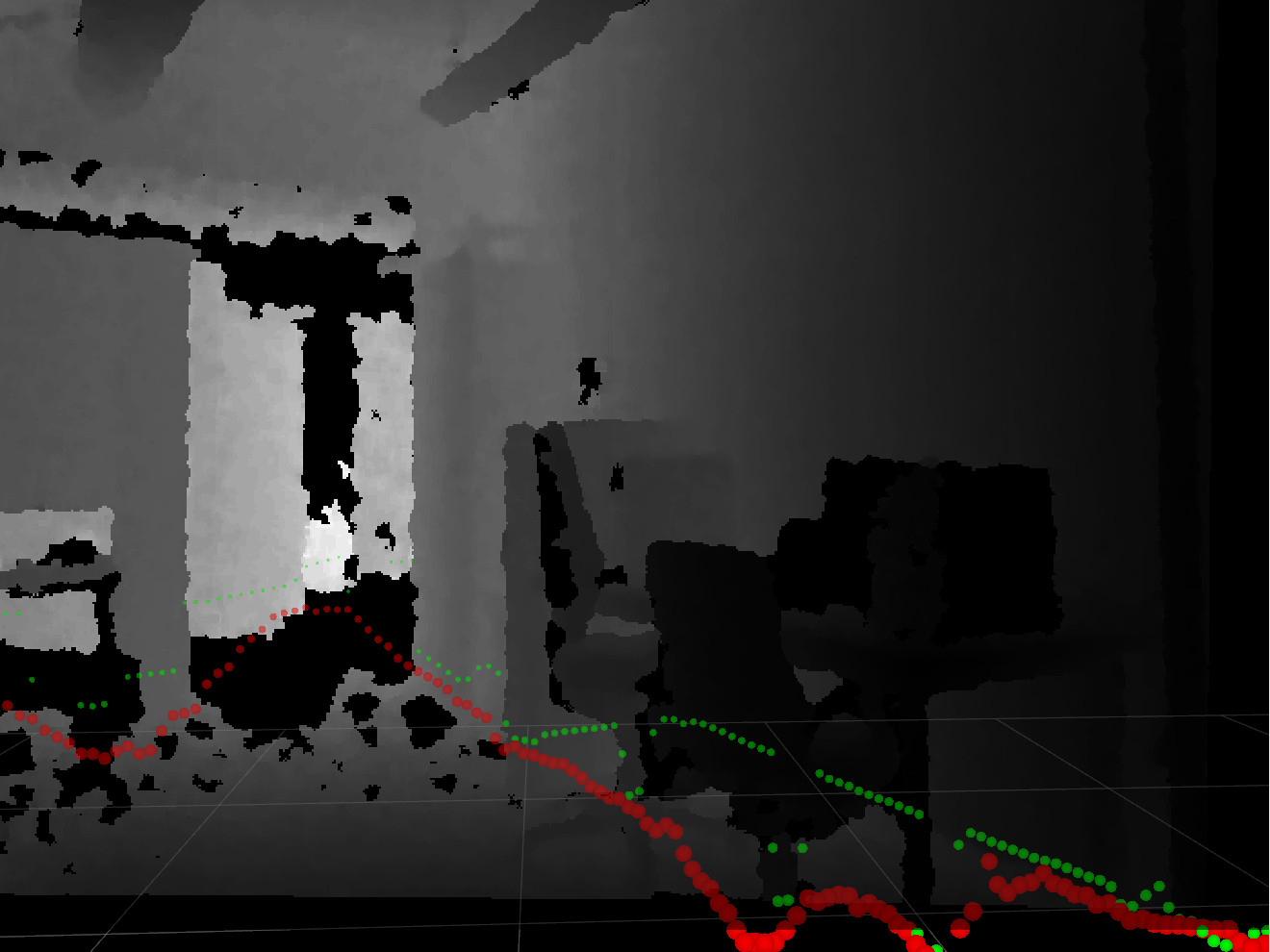

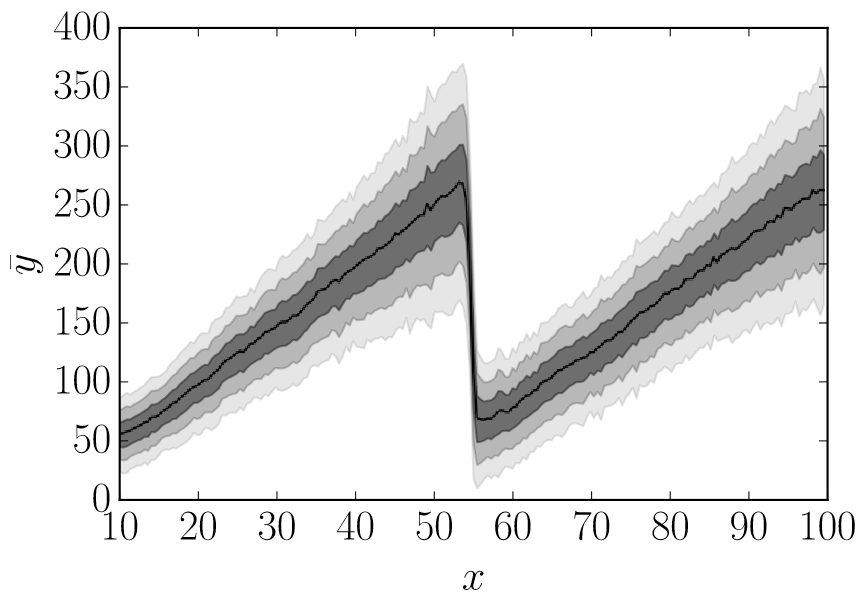

Deep Network Uncertainty Maps for Indoor Navigation
Francesco Verdoja, Jens Lundell, and Ville Kyrki
In 2019 IEEE-RAS Int. Conf. on Humanoid Robots (Humanoids), Oct 2019

Most mobile robots for indoor use rely on 2D laser scanners for localization, mapping and navigation. These sensors, however, cannot detect transparent surfaces or measure the full occupancy of complex objects such as tables. Deep Neural Networks have recently been proposed to overcome this limitation by learning to estimate object occupancy. These estimates are nevertheless subject to uncertainty, making the evaluation of their confidence an important issue for these measures to be useful for autonomous navigation and mapping. In this work we approach the problem from two sides. First we discuss uncertainty estimation in deep models, proposing a solution based on a fully convolutional neural network. The proposed architecture is not restricted by the assumption that the uncertainty follows a Gaussian model, as in the case of many popular solutions for deep model uncertainty estimation, such as Monte-Carlo Dropout. We present results showing that uncertainty over obstacle distances is actually better modeled with a Laplace distribution. Then, we propose a novel approach to build maps based on Deep Neural Network uncertainty models. In particular, we present an algorithm to build a map that includes information over obstacle distance estimates while taking into account the level of uncertainty in each estimate. We show how the constructed map can be used to increase global navigation safety by planning trajectories which avoid areas of high uncertainty, enabling higher autonomy for mobile robots in indoor settings.
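To make the Gaussian versus Laplace comparison in the abstract above concrete, the following sketch shows one generic way to check which distribution describes a set of prediction errors better: fit each by maximum likelihood and compare the average negative log-likelihood. It is only an illustration under stated assumptions. The function names and the `residuals` values are made up for the example and are not data or code from the paper.

```python
import math
import statistics


def gaussian_nll(errors):
    """Average negative log-likelihood under a maximum-likelihood Gaussian fit."""
    mu = sum(errors) / len(errors)
    var = sum((e - mu) ** 2 for e in errors) / len(errors)
    # At the ML estimate the quadratic term averages to exactly 1/2.
    return 0.5 * math.log(2.0 * math.pi * var) + 0.5


def laplace_nll(errors):
    """Average negative log-likelihood under a maximum-likelihood Laplace fit."""
    mu = statistics.median(errors)                      # ML location is the median
    b = sum(abs(e - mu) for e in errors) / len(errors)  # ML scale is the mean |deviation|
    # At the ML estimate the absolute-deviation term averages to exactly 1.
    return math.log(2.0 * b) + 1.0


# Made-up residuals: mostly small errors plus two large outliers (heavy tails).
residuals = [0.01, -0.02, 0.0, 0.03, -0.01, 0.02, -0.03, 0.0, 0.9, -1.1]
print("Gaussian NLL:", gaussian_nll(residuals))
print("Laplace NLL: ", laplace_nll(residuals))
```

A lower value means a better fit. On heavy-tailed errors like the made-up ones here, the Laplace fit scores lower, which is the kind of evidence that would favor a Laplace model over a Gaussian one.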

@inproceedings{201910_verdoja_deep, address = {Toronto, Canada}, title = {Deep {Network} {Uncertainty} {Maps} for {Indoor} {Navigation}}, doi = {10.1109/Humanoids43949.2019.9035016}, booktitle = {2019 {IEEE-RAS} {Int.}\ {Conf.}\ on {Humanoid} {Robots} ({Humanoids})}, publisher = {IEEE}, author = {Verdoja, Francesco and Lundell, Jens and Kyrki, Ville}, month = oct, year = {2019}, pages = {112--119}, }

- IROS

Hallucinating robots: Inferring obstacle distances from partial laser measurements
Jens Lundell, Francesco Verdoja, and Ville Kyrki
In 2018 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), Oct 2018

Many mobile robots rely on 2D laser scanners for localization, mapping, and navigation. However, those sensors are unable to correctly provide distance to obstacles such as glass panels and tables whose actual occupancy is invisible at the height the sensor is measuring. In this work, instead of estimating the distance to obstacles from richer sensor readings such as 3D lasers or RGBD sensors, we present a method to estimate the distance directly from raw 2D laser data. To learn a mapping from raw 2D laser distances to obstacle distances we frame the problem as a learning task and train a neural network formed as an autoencoder. A novel configuration of network hyperparameters is proposed for the task at hand and is quantitatively validated on a test set. Finally, we qualitatively demonstrate in real time on a Care-O-bot 4 that the trained network can successfully infer obstacle distances from partial 2D laser readings.

@inproceedings{201810_lundell_hallucinating, address = {Madrid, Spain}, title = {Hallucinating robots: {Inferring} obstacle distances from partial laser measurements}, doi = {10.1109/IROS.2018.8594399}, booktitle = {2018 {IEEE}/{RSJ} {Int.}\ {Conf.}\ on {Intelligent} {Robots} and {Systems} ({IROS})}, publisher = {IEEE}, author = {Lundell, Jens and Verdoja, Francesco and Kyrki, Ville}, month = oct, year = {2018}, pages = {4781--4787}, }

workshop articles

- ICML

Notes on the Behavior of MC Dropout
Francesco Verdoja and Ville Kyrki
Jul 2021
Presented at the “Uncertainty and Robustness in Deep Learning (UDL)” workshop at the 2021 Int. Conf. on Machine Learning (ICML)

Among the various options to estimate uncertainty in deep neural networks, Monte-Carlo dropout is widely popular for its simplicity and effectiveness. However the quality of the uncertainty estimated through this method varies and choices in architecture design and in training procedures have to be carefully considered and tested to obtain satisfactory results. In this paper we present a study offering a different point of view on the behavior of Monte-Carlo dropout, which enables us to observe a few interesting properties of the technique to keep in mind when considering its use for uncertainty estimation.
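For readers unfamiliar with the technique discussed in the abstract above, Monte-Carlo dropout keeps dropout active at prediction time, runs several stochastic forward passes, and uses the spread of the outputs as an uncertainty estimate. The sketch below shows this on a toy linear model in plain Python. The model, weights, and function names are hypothetical and chosen only to keep the example self-contained. It does not reproduce the study in the paper.

```python
import random
import statistics


def dropout_forward(x, weights, p_drop, rng):
    """One stochastic pass of a toy linear model with dropout on its inputs."""
    keep = 1.0 - p_drop
    total = 0.0
    for xi, wi in zip(x, weights):
        if rng.random() < keep:      # keep this input with probability `keep`
            total += wi * xi / keep  # inverted-dropout scaling keeps the expectation
    return total


def mc_dropout_predict(x, weights, p_drop=0.5, n_samples=200, seed=0):
    """Return the mean and standard deviation over repeated stochastic passes."""
    rng = random.Random(seed)
    samples = [dropout_forward(x, weights, p_drop, rng) for _ in range(n_samples)]
    return statistics.mean(samples), statistics.pstdev(samples)


mean, std = mc_dropout_predict([1.0, 2.0, 3.0], [0.5, -0.25, 0.1])
print(f"prediction: {mean:.3f} +/- {std:.3f}")
```

In this toy setting the reported spread depends directly on the dropout rate and the weights, which hints at why, as the abstract notes, architecture and training choices affect the quality of the resulting uncertainty estimate.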

@online{202107_verdoja_notes, title = {Notes on the {Behavior} of {MC} {Dropout}}, url = {https://sites.google.com/view/udlworkshop2021}, author = {Verdoja, Francesco and Kyrki, Ville}, month = jul, year = {2021}, note = {Presented at the ``Uncertainty and Robustness in Deep Learning (UDL)'' workshop at the 2021 Int.\ Conf.\ on Machine Learning (ICML)}, }

- ICRA

On the Potential of Smarter Multi-layer Maps
Francesco Verdoja and Ville Kyrki
May 2020
Presented at the “Perception, Action, Learning (PAL)” workshop at the 2020 IEEE Int. Conf. on Robotics and Automation (ICRA)

The most common way for robots to handle environmental information is by using maps. At present, each kind of data is hosted on a separate map, which complicates planning because a robot attempting to perform a task needs to access and process information from many different maps. Also, most often correlation among the information contained in maps obtained from different sources is not evaluated or exploited. In this paper, we argue that in robotics a shift from single-source maps to a multi-layer mapping formalism has the potential to revolutionize the way robots interact with knowledge about their environment. This observation stems from the rise in metric-semantic mapping research, but expands to include in its formulation also layers containing other information sources, e.g., people flow, room semantic, or environment topology. Such multi-layer maps, here named hypermaps, not only can ease processing spatial data information but they can bring added benefits arising from the interaction between maps. We imagine that a new research direction grounded in such multi-layer mapping formalism for robots can use artificial intelligence to process the information it stores to present to the robot task-specific information simplifying planning and bringing us one step closer to high-level reasoning in robots.

@online{202005_verdoja_potential, title = {On the {Potential} of {Smarter} {Multi}-layer {Maps}}, url = {https://mit-spark.github.io/PAL-ICRA2020}, author = {Verdoja, Francesco and Kyrki, Ville}, month = may, year = {2020}, note = {Presented at the ``Perception, Action, Learning (PAL)'' workshop at the 2020 IEEE Int.\ Conf.\ on Robotics and Automation (ICRA)}, }

technical reports

-

Robots and the Future of Welfare Services: A Finnish Roadmap
Ville Kyrki, Iina Aaltonen, Antti Ainasoja, Päivi Heikkilä, Sari Heikkinen, and 31 more authors
May 2021

This roadmap summarises a six-year multidisciplinary research project called Robots and the Future of Welfare Services (ROSE), funded by the Strategic Research Council (SRC) established within the Academy of Finland. The objective of the project was to study the current and expected technical opportunities and applications of robotics in welfare services, particularly in care services for older people. The research was carried out at three levels: individual, organisational and societal. The roadmap provides highlights of the various research activities of ROSE. We have studied the perspectives of older adults and care professionals as users of robots, how care organisations are able to adopt and utilise robots in their services, how technology companies find robots as business opportunity, and how the care robotics innovation ecosystem is evolving. Based on these and other studies, we evaluate the development and use of robots in care for older adults in terms of social, ethical-philosophical and political impacts as well as the public discussion on care robots. It appears that there are many single- or limited-purpose robot applications already commercially available in care services for older adults. To be widely adopted, robots should still increase maturity to be able to meet the requirements of care environments, such as in terms of their ability to move in smaller crowded spaces, easy and natural user interaction, and task flexibility. The roadmap provides visions of what could be technically expected in five and ten years. However, at the same time, organisations’ capabilities of adopting new technology and integrating it into services should be supported for them to be able to realise the potential of robots for the benefits of care workers and older persons, as well as the whole society.

This roadmap also provides insight into the wider impacts and risks of robotization in society and how to steer it in a responsible way, presented as eight policy recommendations. We also discuss the ROSE project research as a multidisciplinary activity and present lessons learnt.

@techreport{202100_kyrki_robots, title = {Robots and the {Future} of {Welfare} {Services}: {A} {Finnish} {Roadmap}}, shorttitle = {Robots and the {Future} of {Welfare} {Services}}, number = {4}, institution = {Aalto University}, author = {Kyrki, Ville and Aaltonen, Iina and Ainasoja, Antti and Heikkilä, Päivi and Heikkinen, Sari and Hennala, Lea and Koistinen, Pertti and Kämäräinen, Joni and Laakso, Kalle and Laitinen, Arto and Lammi, Hanna and Lanne, Marinka and Lappalainen, Inka and Lehtinen, Hannu and Lehto, Paula and Leppälahti, Teppo and Lundell, Jens and Melkas, Helinä and Niemelä, Marketta and Parjanen, Satu and Parviainen, Jaana and Pekkarinen, Satu and Pirhonen, Jari and Porokuokka, Jaakko and Rantanen, Teemu and Ruohomäki, Ismo and Saurio, Riika and Sahlgren, Otto and Särkikoski, Tuomo and Talja, Heli and Tammela, Antti and Tuisku, Outi and Turja, Tuuli and Aerschot, Lina Van and Verdoja, Francesco and Välimäki, Kari}, year = {2021}, pages = {72}, }